By Mark J. Davis, Ph.D., Responsible AI Fellow

Adolescent students today are saturated with AI-controlled content that shapes the way they interact with their peers and the world. How many children really understand the assumptions that come with these systems and how AI could impact each of us differently? As educators, we find it essential to help students develop the critical thinking needed to discuss the technology they engage with every day. Using two educational techniques, specifically film as text and the Socratic seminar method, we can support students in developing deeper connections to AI in their world.

The film “Ron’s Gone Wrong” provides an entry point into conversations about AI and ethics with students of all ages. On the surface, the film is an animated tale of friendship and belonging. At a deeper level, learners can examine issues of privilege, inclusion, and data privacy and safety in the age of artificial intelligence. Using this film as the primary text, I have engaged adolescent students with sophisticated AI concepts through relatable characters and situations.

Why Film as Text is a Useful Tool for AI Education

Traditional CS education emphasizes coding and the technical aspects of AI. In parallel, digital and media literacy builds the capability to examine the assumptions behind AI models and their implications for different stakeholders. The connection between Humans and AI, addressed in the AI Learning Priorities for All K-12 Students from the CSTA and AI4K12, makes this even more relevant.

Film speaks the language of modern students, who watch stories unfold on screens every day. By treating a film as a text deserving close attention, we validate students’ cultural experience while simultaneously developing their literacy skills. In “Ron’s Gone Wrong,” AI-driven technology in the form of companion robots parallels real social media platforms and AI assistants. This provides a foundation that grounds abstract ideas in concrete points of interest.

The Instructional Process

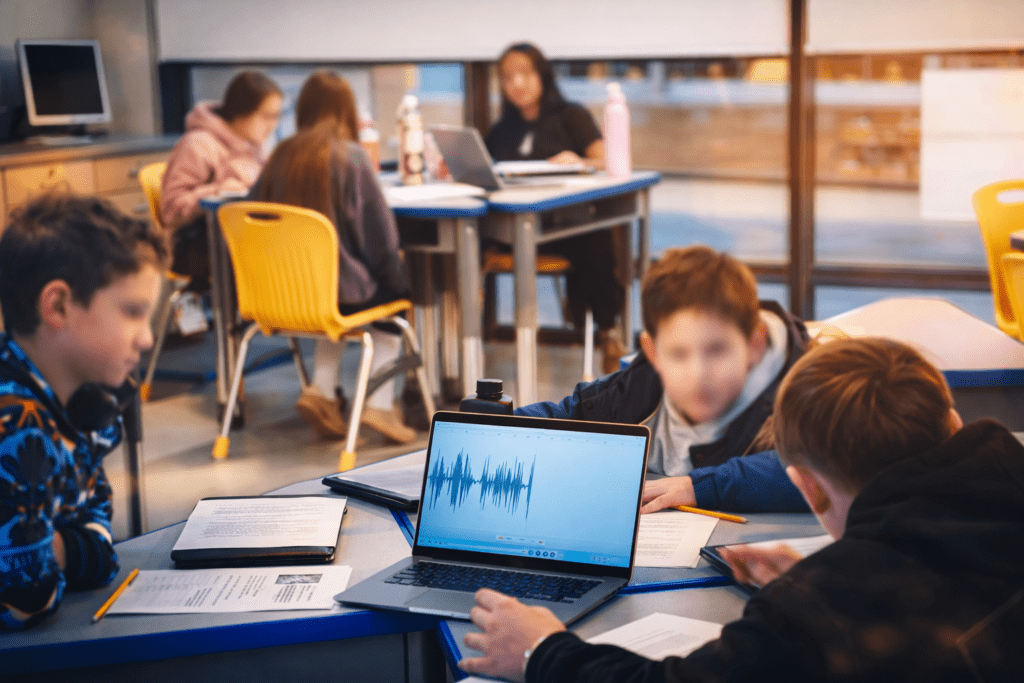

The unit plan is easily differentiated while remaining pedagogically advanced. Students move from passive viewing to active critical thinking, analyzing characters and events in support of a real-world application. Though the timeframe can vary, I designed the unit as a six-day experience for my sixth-grade learners.

Days 1 and 2 involve film viewing with guided note-taking from the perspective of one self-selected character. Students watch and annotate key experiences for the character on a viewing guide. They witness the consequences of AI technology, social dynamics, and character relationships, while documenting moments that parallel real life. The guided notes keep them engaged beyond being entertained and push them to think analytically about what they are watching.

Day 3 is the perspective preparation, carried out in homogeneous groups based on each student’s character. Students’ self-selected characters are rooted in the film’s archetypes: the Outsider (Barney Pudowski), the Bully (Rich Belcher), the Influencer (Savannah Meades), or the Traditionalists (Graham and Donka Pudowski). This preparation consists of analyzing the character’s experience through the three AI themes of privilege, inclusion, and protection. Students gather evidence from the film and discuss different viewpoints to create an argument from the character’s perspective.

Day 4 builds on the character analysis in a Socratic seminar where students debate AI ethics in heterogeneous groups while remaining in character. Students can discuss controversial concepts or question assumptions while keeping a little distance by role-playing as their character. A student might hesitate to say “AI algorithms favor wealthy people” in their own voice, but as Barney or Rich, they can explore that awkward truth with greater freedom.

Day 5 allows students to review their findings from the seminar and then create a real-world application. Students collaborate as a team to develop an AI policy and vision statement for their fictional Nonsuch Middle School, informed by the three themes. They develop policies that support equitable access, genuine human connection, and data security. The process parallels the work of real learning communities as they balance healthy curiosity about AI with restrictions on its use.

Day 6 is a presentation day where students exhibit their policy and receive anonymous peer feedback. The mock policy is included as evidence in a digital portfolio for Computer Science Proficiency in a section on Responsible Computing in Society. Students submit their policy to the community’s stakeholders, including an AI Task Force, so that student voice can inform future policy and curriculum design.

Thematic Connections that Matter

The lesson addresses three themes that are present both in the movie and in practical AI use: privilege and access, inclusion and friendship, and data privacy and protection.

In the privilege theme, students examine issues of access and opportunity to engage with technology. Questions include “Who can access B*Bots, and who is excluded from them?” This helps students reflect on real-world technological inequality. For instance, Barney struggles to afford a B*Bot, which lets students examine the belief that privilege is granted only to people with financial access to technology.

The inclusion theme explores how AI impacts human relationships. The movie shows characters using social tools in public spaces, yet they remain isolated in digital bubbles, constantly observing one another and looking to their B*Bots’ social media features for confirmation. Students recognize this pattern in their own lives, which makes the experience more culturally relevant.

The protection theme involves data privacy and safety. The B*Bots gather a large amount of personal information to ensure efficient friendship, but at what price? When does personalization become surveillance? These questions reflect fears about the way tech companies exploit student data and create anxiety and pressure to participate in a digital experience.

Why This Approach Works

The lesson works on several levels: it meets students where they are, uses media they understand to examine ideas that influence their lives, and fosters critical thinking grounded in evidence. The process links abstract principles of AI to concrete matters of modern society. Students also learn to view themselves as stakeholders in AI design and deployment.

The Socratic seminar format is especially useful for this generation of digital producers. Students build understanding through discussion rather than passively absorbing information. They learn to substantiate claims with evidence, consider a variety of opinions, and reflect on their own knowledge and experience.

Teaching AI ethics through film gives students opportunities to discuss the impact that technology has on the way we live while cultivating the critical thinking skills they will need as future digital and civic leaders.

You are encouraged to try these materials, available under a Creative Commons Attribution (CC BY) license from this Google Drive. The materials include modifiable student handouts, response examples, and a teacher’s guide, so even if you are new to film-based or Socratic lessons, you can start almost immediately.

About the Author

Mark J. Davis is a passionate Digital Literacy educator and advocate with more than two decades of experience serving in K–12 public education. Holding a Ph.D. in Education with a specialization in infographic literacy, he blends academic expertise with practical classroom insight to help students and educators navigate today’s complex media landscape. Mark is also a researcher with the Media Education Lab, where he contributes to advancing scholarship and resources in media and digital literacy. He lives in Massachusetts with his wife and two children, where he continues to champion the critical role of literacy in a digital age.