By Dr. Kip Glazer

Introduction

On Aug. 9, 2023, I had the pleasure of attending a convening hosted by the EngageAI Institute that included researchers, practitioners, and industry leaders. During that meeting, TeachFX Founder Jamie Poskin mentioned that AI made him think about when Scantrons were developed. He noted that the Scantron, while solving an efficiency problem for teachers, enabled the proliferation of standardized tests. According to Poskin, testing data generated by the use of Scantrons has been used to harm whole communities of learners. He compared the use of Artificial Intelligence (AI) to what I now call “the Scantron Problem,” i.e., when a great tech solution creates harmful and unanticipated consequences that negatively impact all of us.

Clear Definition: Generative AI (like ChatGPT) is not the only AI!

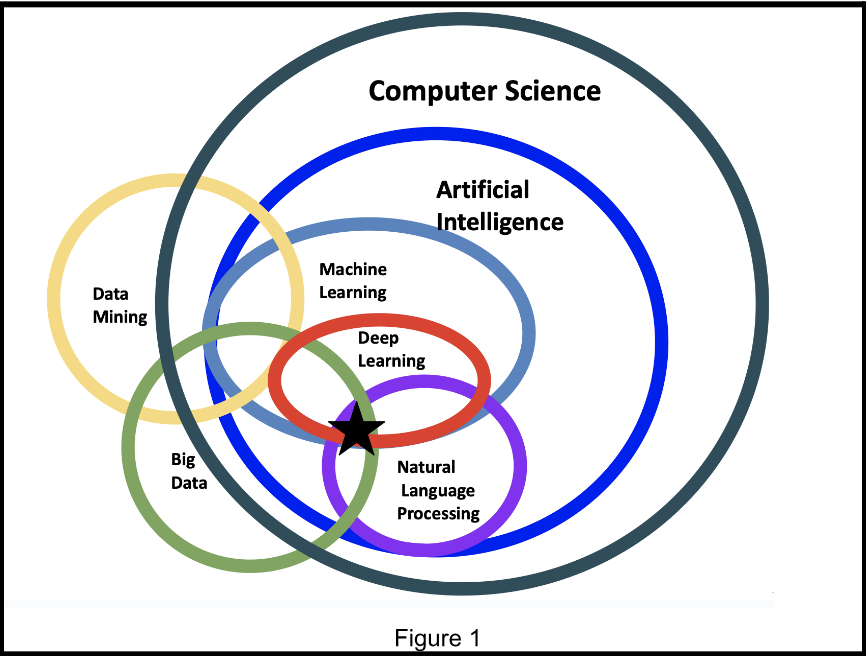

Before discussing the impact of AI, it is important that we have a clear definition of what AI is. Since its release in November of 2022, ChatGPT has become synonymous with AI in the public’s mind. This simplification and mislabeling are extremely problematic because ChatGPT, a type of large language model (LLM), is a small part of the field of AI (Ruiz et al., Figure 1). Under the newest White House Executive Order, ChatGPT is also considered “Generative AI [which is] the class of AI models that emulate the structure and characteristics of input data in order to generate derived synthetic content. This can include images, videos, audio, text, and other digital content” (The White House, 2023). Understanding where ChatGPT fits is extremely important as we continue to learn more about AI and its use in the educational ecosystem.

So, what exactly is AI? According to the Office of Educational Technology (OET), “AI can be defined as ‘automation based on associations’” (U.S. Department of Education, 1). Such a definition is extremely useful in distinguishing AI from conventional educational technology tools. According to OET, AI’s capability in “detecting patterns and automating decisions” (U.S. Department of Education, 1) is why all of us, particularly educators and school leaders, must be vigilant in its use, as it may “lead to bias in how patterns are detected and unfairness in how decisions are automated” (U.S. Department of Education, 1). In addition, we should all be mindful of the emulative and derivative nature of the digital products generated by generative AI tools, which stands in direct contrast to the creative and original nature of their creators and users, i.e., the humans.

Examining the Underlying Philosophy: Is More or Faster Always Better?

As we consider incorporating AI into our educational ecosystem, it is important for all of us to recognize our underlying assumptions. Because AI can automate, we will certainly be able to get more done more quickly than before. However, whether we should always do that is something all of us should carefully consider. In addition, many tools are developed based on what Meredith Broussard calls “technochauvinism…a presumption that the most advanced technological solution is inherently the best one” (Thompson, 2018). During her keynote at the recent CIRCLS Convening, Broussard cautioned us against anthropomorphizing AI. She argued that AI is mathematics, and we should treat it as such. I would go a step further and say that educators should actively resist the pressure to automate what should never be automated, such as the sociocultural experiences that are core to learning and that make us human.

Avoiding the Future Scantron Problem

How can educators avoid creating yet another Scantron Problem when it comes to AI? Below are three recommendations.

- Play, not pay

As we experienced during the pandemic, educators will be pressed to adopt AI tools rapidly. Before you pay for any tool, I recommend that you experiment with several. These tools are changing so rapidly that paying for an expensive one would not be prudent.

- Focus on data security and privacy

As you explore these tools, be sure never to include any sensitive or confidential information. Treat any AI tool as a social media account or even the front page of a major newspaper. If you would not want to see something on the front page of a major newspaper, you should not add it to the tool. This is particularly important when you are handling your students’ information.

- Educate yourself

Whether you want it or not, AI is here to stay. As such, you must educate yourself. I recommend that you start with the Glossary of Terms (Ruiz and Fusco, 2023). You should also consider reading the OET’s Artificial Intelligence and the Future of Teaching and Learning.

Conclusion

As a person who loves technology, I certainly see great potential for AI in education. When I visited the tillt lab at Northwestern University, I spoke to several Ph.D. students working on developing AI-enabled tools rooted in sound learning theories. I was inspired to see the future potential of AI-enabled tools that could ensure equity of participation and enhance learning experiences for all students. Because of the ubiquity of AI, it will be up to all of us to ensure that the positive attributes of AI outshine the potential negative consequences. Just as the proliferation of Scantrons created unintended consequences, the rapid proliferation of AI has already forced humans to confront many ethical issues. AI in education will transform what it means to be a learned person as it continues to push the boundaries of and for learning. Resisting the temptation to abdicate our moral and ethical responsibilities in exchange for convenience and speed will require intentional efforts from all of us.

About the Author

Kip Glazer is a long-time public educator and school administrator who is passionate about the proper use of technology for teaching and learning. Currently a principal in Mountain View, CA, she holds a Doctorate in Learning Technologies from Pepperdine. Her dissertation highlighted how game creation improves students’ literacy and numeracy skills. As a public educator, she believes in public education’s mission of creating an educated populace for the protection of democracy, and she has worked on elevating practitioner voices in the educational technology space to fulfill that mission. She was a panel member for the webinar hosted by the US Department of Education for its launch of “Artificial Intelligence and the Future of Teaching and Learning” (https://tech.ed.gov/ai-future-of-teaching-and-learning/) and has joined the Practitioner Advisory Board (https://engageai.org/who-we-are/our-team/) for the EngageAI Institute. As proud as she is of her professional accomplishments, she is most proud of her two sons, who graduated from West Point and are currently serving in the Army.

References

Cook, J. (2023, July 27). Why ChatGPT Is Making Us Less Intelligent: 6 Key Reasons.

Ruiz, P., & Fusco, J. (2023, July 19). Glossary of Artificial Intelligence Terms for Educators. CIRCLS. https://circls.org/educatorcircls/ai-glossary

Ruiz, P., Fusco, J., Glazer, K., & Loftin, S. (2023, March 20-23). Insights on AI and the Future of Teaching and Learning [Paper presentation]. CoSN 2023 Conference, Austin, TX, United States.

The White House. (2023, October 30). Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.

Thompson, D. (2018, September 30). How Technochauvinism Derailed the Digital Future. The Atlantic. Retrieved from https://www.theatlantic.com/technology/archive/2018/09/tech-was-supposed-to-be-societys-great-equalizer-what-happened/571660/

U.S. Department of Education. (2023). Artificial Intelligence and the Future of Teaching and Learning. Office of Educational Technology.